Introduction

In research and data analysis, few concepts are as pivotal, or as misunderstood, as statistical significance. Examining the myths and misconceptions around it clarifies how data should be interpreted and equips researchers, marketers, and decision-makers to draw accurate, ethical conclusions from their studies.

Statistical significance is often treated as a gold standard, a prerequisite for reporting findings. However, reliance on this single measure can lead to misinterpretation, misguided strategy, and ultimately faulty decisions. This article aims to correct common fallacies and give readers a clearer basis for their own analyses.

The Foundation of Statistical Significance

Before tackling the myths, it’s essential to establish what statistical significance is. A result is called statistically significant when data at least as extreme as those observed would be unlikely if the null hypothesis were true. This is usually quantified with a p-value. A p-value below a pre-specified threshold (commonly 0.05) leads the researcher to reject the null hypothesis in favor of the alternative hypothesis.
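One way to make the definition concrete is a permutation test, which estimates a p-value by counting how often randomly relabelled data produce a difference at least as large as the one observed. The sketch below is illustrative only: the function name `permutation_p_value` and the two small samples are invented for this example and do not come from any study discussed here.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        # Shuffling the labels simulates a world where the null hypothesis holds.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Hypothetical measurements, made up for illustration.
control = [12.1, 11.8, 12.4, 12.0, 11.9, 12.3, 12.2, 11.7]
treated = [12.9, 13.1, 12.7, 13.0, 12.8, 13.2, 12.6, 13.3]

p = permutation_p_value(control, treated)
print(f"Estimated p-value: {p:.4f}")
```

The returned value is the share of shuffles that look at least as extreme as the real data, which is exactly the "probability under the null hypothesis" that a p-value describes.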

Common Misconceptions

- Myth: A p-value of 0.05 is a Hard Boundary

- Reality: While 0.05 is a common threshold, it is a convention rather than a natural boundary. A p-value of 0.049 and one of 0.051 represent nearly identical evidence, so the value should be interpreted in context rather than treated as a definitive cutoff.

- Myth: Statistical Significance Implies Practical Relevance

- Reality: A statistically significant result does not guarantee that the effect is practically significant. For instance, a large sample size might yield a p-value < 0.05, but the actual effect size may be negligible.

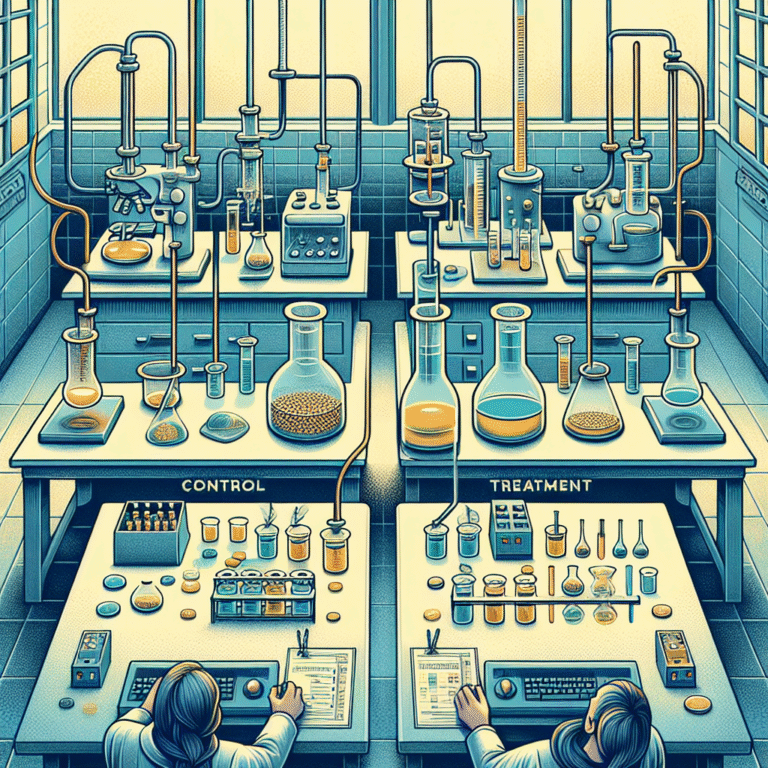

- Myth: If Hypothesis Testing is Performed, the Research is Validated

- Reality: Hypothesis tests alone cannot validate a research study. Researchers need to ensure that the methodology, sampling, and analysis are sound before the conclusions can be considered valid.
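The point that a large sample can turn a negligible effect into a significant one can be checked with a few lines of arithmetic. The sketch below holds a tiny difference fixed and only changes the sample size. It assumes a two-sample z-test with a known, shared standard deviation, and the means, standard deviation, and sample sizes are hypothetical values picked for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_z(mean_a, mean_b, sd, n_per_group):
    """Return (Cohen's d, two-sided p-value) for two equal-size groups."""
    d = (mean_b - mean_a) / sd                    # standardized effect size
    standard_error = sd * sqrt(2 / n_per_group)   # SE of the difference in means
    z = (mean_b - mean_a) / standard_error
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return d, p


# Same tiny difference (0.2 units on a scale with SD 10), growing samples.
results = {}
for n in (100, 10_000, 200_000):
    results[n] = two_sample_z(mean_a=100.0, mean_b=100.2, sd=10.0, n_per_group=n)
    d, p = results[n]
    print(f"n per group = {n:>7}: Cohen's d = {d:.3f}, p = {p:.3g}")
```

The effect size stays at about 0.02 throughout, while the p-value drops below 0.05 only because the sample grows.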

Case Studies Illustrating Misunderstandings

Case Study 1: The Medical Field

In the 1990s, a widely publicized study suggested that a particular medication greatly reduced heart attack risk among patients. The p-value was reported at 0.04, leading to headlines celebrating the "miracle drug." However, upon closer inspection, the effect size of the medication was minimal: the findings were statistically significant but not practically impactful for patient care. This illustrates the perils of conflating statistical significance with meaningful clinical outcomes.

Case Study 2: Marketing Analytics

A marketing team used A/B testing to determine whether a new website layout improved conversion rates. The results showed a p-value of 0.03, but the increase in conversion was only 0.1%. The team rolled out the new layout on the strength of the statistically significant result, yet the change did not lead to any substantial revenue growth. This shows that relying on statistical significance alone, without effect size analysis, can lead to poor marketing decisions.
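A situation like this one can be reproduced with a two-proportion z-test. The sketch below is not the team's actual analysis: the visitor and conversion counts are hypothetical, and it assumes the 0.1% increase means 0.1 percentage points (for example 2.0% to 2.1%), which the case study does not specify.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (absolute lift, two-sided p-value) for two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)   # pooled rate under the null
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / standard_error
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, p


# Hypothetical traffic: 200,000 visitors per layout.
lift, p_value = two_proportion_z_test(conv_a=4_000, n_a=200_000,
                                      conv_b=4_200, n_b=200_000)
print(f"Absolute lift: {lift:.4%}, p-value: {p_value:.3f}")
```

With this much traffic the test clears the 0.05 threshold, yet the lift is one extra conversion per thousand visitors. Whether that justifies a rollout is a business question the p-value cannot answer.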

Critical Points of Statistical Testing: Navigating the Terrain

To navigate statistical testing effectively, it is vital to understand its key components. The table below pairs common statistical terms with the misconceptions they attract.

| Term | Definition | Misconception & Clarification |
|---|---|---|
| P-Value | Probability of results at least as extreme as those observed, assuming the null hypothesis is true | It does not reflect the size or importance of an effect. |
| Effect Size | Measure of the strength of the phenomenon | Statistical significance does not imply a large effect size. |
| Type I Error | Rejecting a true null hypothesis | Misinterpretation can lead to crediting findings that are mere chance outcomes. |
| Type II Error | Failing to reject a false null hypothesis | A non-significant result does not prove there is no effect; a real and practically relevant effect may have been missed. |
| Confidence Interval | Range of plausible values for the true effect at a stated confidence level | Statistically significant results can still have wide confidence intervals, indicating considerable uncertainty. |
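The Type I error row can be illustrated by simulation: when there is truly no difference between groups, a test at the 0.05 level should still flag roughly 5% of experiments as significant. The sketch below assumes normally distributed data with a known standard deviation of 1, and the function name and default settings are illustrative choices.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def false_positive_rate(n_experiments=2_000, n_per_group=30, alpha=0.05, seed=1):
    """Share of null experiments (no real effect) that come out 'significant'."""
    rng = random.Random(seed)
    standard_error = sqrt(2 / n_per_group)   # both groups have known SD = 1
    false_positives = 0
    for _ in range(n_experiments):
        # Both groups are drawn from the same distribution: the null is true.
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        z = (fmean(b) - fmean(a)) / standard_error
        p = 2 * (1 - NormalDist().cdf(abs(z)))
        if p < alpha:
            false_positives += 1
    return false_positives / n_experiments


rate = false_positive_rate()
print(f"Fraction of null experiments with p < 0.05: {rate:.3f}")
```

The printed fraction should land close to the chosen alpha, which is why a single significant result is not proof that an effect is real.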

Actionable Insights

How to Approach Statistical Significance Thoughtfully

- Never Rely Solely on P-Values: Always consider effect sizes and confidence intervals alongside p-values to gain a clearer picture of your findings.

- Contextualize Your Results: Step back and reflect on what your findings mean in practical terms as opposed to purely statistical ones, and always consider the real-world applicability of your data.

- Educate Stakeholders: When presenting statistical results, take the time to explain the nuances of significance and effect sizes to stakeholders. This helps foster informed decision-making.

- Consider Predetermined Standards: Use standards tailored to your specific field and set them before collecting data. What’s accepted in healthcare may differ from marketing, which underlines the importance of context.

- Investigate Further: If results are statistically significant but practically irrelevant, consider follow-up studies to probe further into the data for actionable insights.
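The advice above can be combined into a single report: an estimate, its confidence interval, and a comparison against a threshold chosen in advance. The sketch below assumes a normal approximation for the difference in means. The summary statistics and the `smallest_effect_of_interest` threshold are hypothetical; in practice that threshold would come from domain knowledge, not from the data.

```python
from math import sqrt
from statistics import NormalDist


def difference_ci(mean_a, mean_b, sd_a, sd_b, n_a, n_b, confidence=0.95):
    """Return (difference, lower bound, upper bound) for mean_b - mean_a."""
    diff = mean_b - mean_a
    standard_error = sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff, diff - z_crit * standard_error, diff + z_crit * standard_error


# Hypothetical summary statistics for two groups.
diff, low, high = difference_ci(mean_a=50.0, mean_b=50.6,
                                sd_a=8.0, sd_b=8.0, n_a=5_000, n_b=5_000)

smallest_effect_of_interest = 2.0   # assumed threshold, set before the study

statistically_significant = low > 0 or high < 0
practically_relevant = low >= smallest_effect_of_interest

print(f"Difference: {diff:.2f} (95% CI {low:.2f} to {high:.2f})")
print(f"Statistically significant: {statistically_significant}")
print(f"Clears the practical threshold: {practically_relevant}")
```

Here the interval excludes zero but sits well below the threshold, which is the "significant but not relevant" pattern that the follow-up advice addresses.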

Conclusion

Statistical significance is a powerful tool but shouldn’t be used as a one-size-fits-all metric for decision-making. Understanding its limitations and placing results in context leads to more careful, better-informed choices in both research and practice.

As you move forward, remember that the strength of your findings lies as much in their statistical values as it does in their real-world applications. Armed with the right knowledge, you can approach data with a discerning eye, making impactful and ethical decisions.

FAQs About Statistical Significance

- What does a p-value signify?

- A p-value indicates the probability of observing your results, or more extreme results, if the null hypothesis is true. A smaller p-value generally indicates stronger evidence against the null hypothesis.

- Can we trust results with a p-value of 0.06?

- While not statistically significant by conventional standards, a p-value of 0.06 may still denote relevant findings, especially in exploratory research contexts.

- Why is effect size important?

- Effect size quantifies the magnitude of the difference between groups, offering a perspective that doesn’t solely rely on p-values to convey the importance of findings.

- How can I improve my statistical analysis?

- Engage in continuous education, utilize resources that emphasize proper statistical methods, and seek peer consultation to interpret results correctly.

- Are there alternatives to p-values?

- Yes, alternatives such as Bayesian statistics, confidence intervals, and effect sizes can provide richer insights and mitigate the limitations of traditional hypothesis testing.
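As one illustration of the Bayesian alternative, the sketch below estimates the probability that variant B has a higher conversion rate than variant A. It is a minimal example, not a recommended workflow: it assumes a uniform Beta(1, 1) prior on each rate, uses Monte Carlo draws from the resulting Beta posteriors, and the conversion counts are hypothetical.

```python
import random


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=2):
    """Monte Carlo estimate of P(rate_b > rate_a) under Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each rate: Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


# Hypothetical data: 120 of 1,000 visitors convert on A, 150 of 1,000 on B.
probability = prob_b_beats_a(conv_a=120, n_a=1_000, conv_b=150, n_b=1_000)
print(f"Estimated P(B converts better than A): {probability:.3f}")
```

The output is a direct probability statement about the quantity of interest, which many stakeholders find easier to interpret than a p-value. The result does depend on the chosen prior.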

In summary, clear interpretation depends on weighing practical importance, context, and ethical considerations alongside the numbers. Statistical significance holds both power and pitfalls, and it’s up to you to navigate them wisely.