Introduction: Hooking into Hypothesis Testing

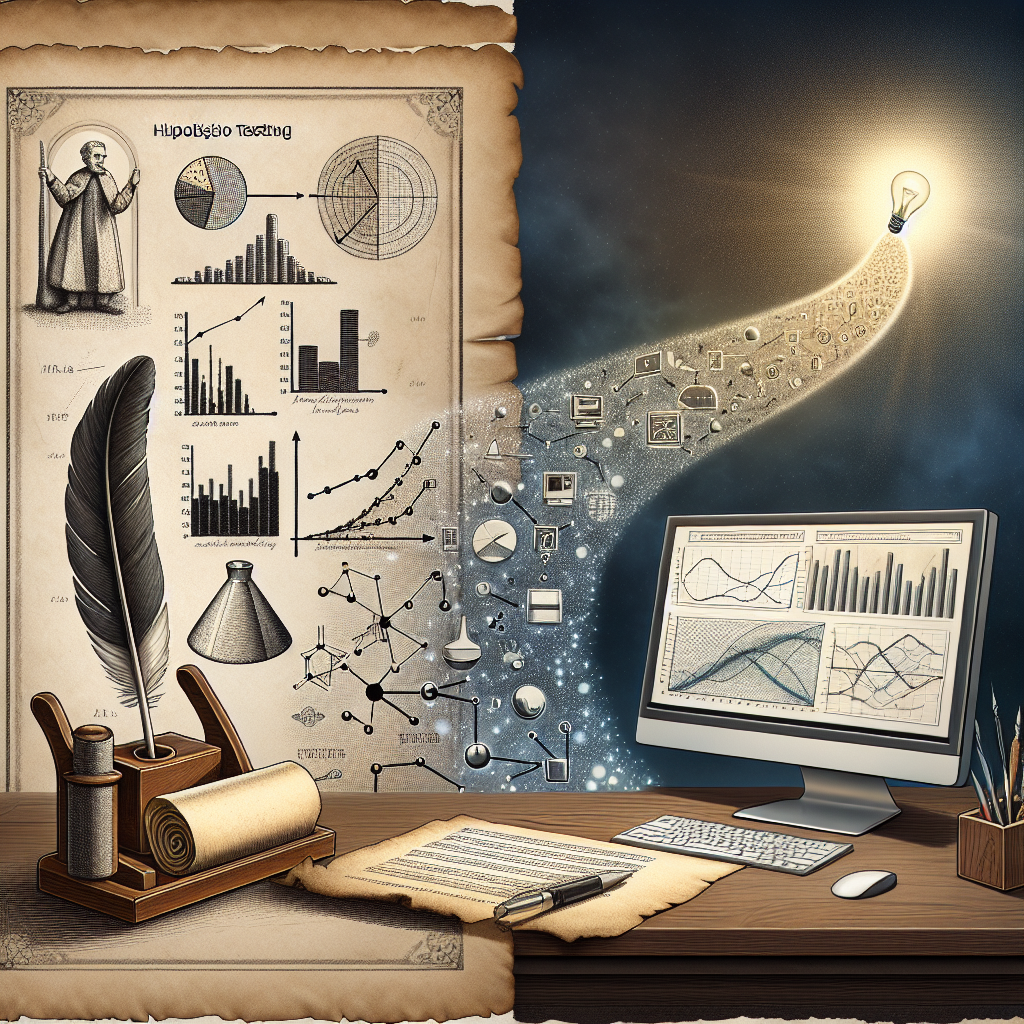

In statistics, hypothesis testing is a cornerstone for making informed decisions and evaluating theories against empirical evidence. Since its beginnings in the early 20th century, it has grown from a handful of significance tests into a broad family of methods, including power analysis, Bayesian testing, and multiple-comparison procedures, many of which modern computing has made routine. Understanding this evolution, from Fisher to modern methods, is essential for anyone who wants to draw sound conclusions from data.

This article traces the history and transformation of hypothesis testing, examining the key figures, methods, and applications that shaped its current form. Whether you are a statistician, a researcher, or simply a curious learner, it explains why hypothesis testing is an essential skill in many fields.

The Historical Context: Fisher’s Groundbreaking Contributions

1. The Birth of Hypothesis Testing

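Modern hypothesis testing traces much of its framework to Ronald A. Fisher in the early 20th century. In his 1925 book, "Statistical Methods for Research Workers," Fisher popularized significance testing: the researcher states a null hypothesis (H0), such as "the treatment has no effect," and asks how surprising the observed data would be if that hypothesis were true. The alternative hypothesis (H1), and the idea of formally choosing between H0 and H1, were added later by Jerzy Neyman and Egon Pearson. Significance testing gives researchers a principled way to judge whether observations are plausibly due to chance or point to a real effect.

Fisher’s 1935 book, "The Design of Experiments," in which he coined the term "null hypothesis," presented practical techniques and rigorous methods that inspired generations of statisticians. The p-value, which Fisher popularized as a measure of the strength of evidence against the null hypothesis, remains a staple of modern hypothesis testing.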

Case Study: Fisher’s Field Experiments at Rothamsted

Fisher’s design and analysis of agricultural field trials at the Rothamsted Experimental Station, where he worked from 1919, exemplify the application of hypothesis testing. By randomly assigning treatments such as fertilizers to replicated plots and measuring crop yields, he demonstrated how statistical principles could yield reliable conclusions about agricultural and biological questions. This work grounded Fisher’s methods in real data and emphasized the importance of randomization and replication in scientific inquiry.

Analysis

Viewed through the lens of hypothesis testing, Fisher’s experiments showed how rigorous statistical methods could lead to breakthroughs in the biological sciences. His legacy is evident in how widely these methods have been adopted, in fields from agriculture to medicine.

2. The Ubiquitous t-tests

The t-test was published in 1908 by William Sealy Gosset under the pseudonym "Student." It predates Fisher’s books, and Fisher later extended Gosset’s work and made the t-test a central part of his framework. The t-test provided a reliable way to compare means when samples are small and the population variance has to be estimated from the data, a situation that the large-sample methods of the time handled poorly.
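To make the mechanics concrete, here is a minimal sketch of the classic equal-variance (pooled) two-sample t-test in standard-library Python. It is an illustration under assumptions, not Gosset’s original procedure: the function names and data are hypothetical, and the p-value comes from numerically integrating the t density with Simpson’s rule rather than from a statistics library (in practice a library routine such as SciPy’s `scipy.stats.ttest_ind` would normally be used).

```python
# Illustrative sketch; function names and example data are hypothetical.
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t: float, df: int, steps: int = 10_000) -> float:
    """Two-sided p-value: 1 - 2 * (area under the t density between 0 and |t|)."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # Simpson's rule needs an even number of steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


def student_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int, float]:
    """Student's two-sample t-test assuming both groups share one population variance."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean(a) - mean(b)) / std_err
    return t, df, two_sided_p(t, df)


# Hypothetical measurements from two small batches.
t, df, p = student_t_test([1, 2, 3, 4, 5], [3, 4, 5, 6, 7])
print(f"t = {t:.2f}, df = {df}, p = {p:.3f}")
```

Here t is -2.0 with 8 degrees of freedom and the two-sided p-value is roughly 0.08, so at the conventional 0.05 level these small samples would not be enough to reject equal means.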

Case Study: Student’s t-test and Brewing

Gosset developed the t-test while working as a brewer at Guinness, where experiments on ingredients and processes often produced only small samples. He needed a reliable way to assess how ingredients contributed to variation in the product for quality control. His work not only advanced statistical methods but also showed the practical importance of hypothesis testing in quality assurance.

Analysis

This case study reinforces the relevance of hypothesis testing within industries. By showcasing the impact of statistical methods on quality control, it illustrates how hypothesis testing can drive efficiency and innovation.

The Mid-20th Century: Expanding Horizons

3. The Statistical Revolution

Around the middle of the 20th century, hypothesis testing underwent further refinement. Jerzy Neyman and Egon Pearson had formalized Type I errors (false positives), Type II errors (false negatives), and the power of a test in the late 1920s and 1930s; power is the probability that a test detects an effect when it truly exists. In the 1960s, Jacob Cohen turned power analysis into a practical research tool, adding depth to Fisher’s original ideas by emphasizing the balance between the two types of error.
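The trade-off between the two error types can be seen in a small Monte Carlo sketch. It assumes a two-sided one-sample z-test with a known standard deviation of 1; the function name, sample size, effect size, and seeds are illustrative choices, not values from the text. When the true mean is 0, every rejection is a Type I error; when the true mean is 0.5, the rejection rate estimates the test’s power.

```python
# Illustrative sketch; the function name, effect size, n, and seed are hypothetical.
import random
from statistics import NormalDist


def rejection_rate(true_mean: float, n: int, alpha: float = 0.05,
                   trials: int = 5_000, seed: int = 1) -> float:
    """Share of simulated experiments in which a two-sided z-test rejects H0: mean = 0.

    Each experiment draws n values from Normal(true_mean, 1); sigma = 1 is assumed known.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample_mean = sum(rng.gauss(true_mean, 1.0) for _ in range(n)) / n
        z = sample_mean * n ** 0.5  # (mean - 0) / (sigma / sqrt(n)) with sigma = 1
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials


type_i_rate = rejection_rate(true_mean=0.0, n=25)   # H0 true: rejections are false positives
power = rejection_rate(true_mean=0.5, n=25)         # H1 true: rejections are correct
strict_power = rejection_rate(true_mean=0.5, n=25, alpha=0.01)
print(f"Type I rate ~ {type_i_rate:.3f}, power ~ {power:.3f}, Type II rate ~ {1 - power:.3f}")
print(f"power at alpha = 0.01 ~ {strict_power:.3f}")
```

With these settings the Type I rate should land near the nominal 0.05 and the power near 0.70. Tightening alpha to 0.01 lowers the false-positive rate but also lowers power, to roughly 0.47, which is the balance between the two errors in action.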

Case Study: Cohen’s Work on Power Analysis

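Cohen’s research on power analysis, summarized in his 1969 book "Statistical Power Analysis for the Behavioral Sciences," gave researchers tools, including standardized effect sizes, to determine the sample size needed to detect an effect of a given magnitude. This improved the rigor of experimental design in fields such as psychology and medicine.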
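Power-based planning can be sketched with the usual normal approximation for a two-sided, two-sample comparison of means: n per group = 2(z_(1-alpha/2) + z_(1-beta))^2 / d^2, where d is the standardized effect size (Cohen’s d) and 1 - beta is the desired power. The function names are hypothetical, and because the formula uses the normal rather than the t distribution, it slightly underestimates the sample sizes in Cohen’s own tables; treat it as a sketch rather than a replacement for a dedicated power-analysis tool.

```python
# Illustrative sketch; function names are hypothetical, and the normal approximation is assumed.
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size per group for a two-sided, two-sample test of means."""
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = _STD_NORMAL.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / d ** 2)


def approx_power(d: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power with n per group (ignores the negligible opposite tail)."""
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    return _STD_NORMAL.cdf(abs(d) * math.sqrt(n / 2) - z_alpha)


# Cohen's conventional small, medium, and large effect sizes.
for label, d in [("small", 0.2), ("medium", 0.5), ("large", 0.8)]:
    n = n_per_group(d)
    print(f"{label:>6} (d = {d}): {n} per group, power ~ {approx_power(d, n):.3f}")
```

Under this approximation, detecting a medium effect with 80% power at alpha = 0.05 takes about 63 participants per group, while a small effect needs about 393.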

Analysis

Cohen’s contributions highlight how hypothesis testing evolved beyond mere decision-making frameworks into more comprehensive tools that encourage methodical planning in research.

Modern Methods: Fusion of Tradition and Technology

4. The Rise of Bayesian Methods

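In contrast to Fisher’s frequentist perspective, the Bayesian approach to hypothesis testing gained momentum in the latter part of the 20th century, helped by growing computing power, although its roots go back to Thomas Bayes and Pierre-Simon Laplace. Bayesian statistics combines prior knowledge with the observed data to produce a posterior distribution, so conclusions can be stated as probabilities of hypotheses given the data and the context in which they were collected.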
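A minimal sketch of a Bayesian test for a success probability theta, assuming k successes in n independent trials. It compares H0: theta = 0.5 against H1, under which theta gets a uniform Beta(1, 1) prior; the ratio of the two marginal likelihoods is the Bayes factor BF10, a standard Bayesian counterpart to a significance test introduced here for illustration. The uniform prior, the example counts, and the function names are assumptions.

```python
# Illustrative sketch; the uniform prior, counts, and function names are hypothetical.
from math import comb


def bayes_factor_10(k: int, n: int) -> float:
    """BF10 for H1: theta ~ Uniform(0, 1) versus H0: theta = 0.5, given k successes in n trials."""
    marginal_h0 = comb(n, k) * 0.5 ** n
    # Averaging the binomial likelihood over a uniform prior gives exactly 1 / (n + 1).
    marginal_h1 = 1 / (n + 1)
    return marginal_h1 / marginal_h0


def posterior_prob_h1(bf10: float, prior_prob_h1: float = 0.5) -> float:
    """Convert a Bayes factor and a prior probability for H1 into a posterior probability."""
    prior_odds = prior_prob_h1 / (1 - prior_prob_h1)
    posterior_odds = bf10 * prior_odds
    return posterior_odds / (1 + posterior_odds)


for k in (9, 5):
    bf = bayes_factor_10(k, 10)
    print(f"{k}/10 successes: BF10 = {bf:.2f}, P(H1 | data) = {posterior_prob_h1(bf):.2f}")
```

Nine successes in ten trials give a BF10 of about 9.3, so the data are roughly nine times more probable under H1 than under H0. Five in ten give a BF10 below 1, which, unlike a non-significant p-value, counts as positive evidence for the null. A different prior would change these numbers, which is why Bayesian analyses usually report the prior alongside the result.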

Case Study: Clinical Trials Using Bayesian Methods

Bayesian methods are increasingly used to assess treatments in modern clinical trials. For instance, adaptive trials allow researchers to modify pre-specified aspects of a study, such as how patients are allocated to arms or when to stop, based on interim results, improving flexibility and patient safety.
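The kind of interim check used in Bayesian adaptive trials can be sketched as follows. The sketch assumes a binary outcome (responded or not), independent Beta(1, 1) priors on each arm’s response rate, and Monte Carlo draws from the resulting Beta posteriors. The stopping thresholds (0.99 for efficacy, 0.10 for futility), the counts, and the function names are hypothetical; real trials pre-specify such rules in the protocol and check their operating characteristics by simulation.

```python
# Illustrative sketch; thresholds, counts, and function names are hypothetical.
import random


def prob_treatment_better(resp_t: int, n_t: int, resp_c: int, n_c: int,
                          draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(rate_treatment > rate_control | data) with Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each arm: Beta(1 + responders, 1 + non-responders).
        p_t = rng.betavariate(1 + resp_t, 1 + n_t - resp_t)
        p_c = rng.betavariate(1 + resp_c, 1 + n_c - resp_c)
        if p_t > p_c:
            wins += 1
    return wins / draws


def interim_decision(prob_better: float, efficacy: float = 0.99, futility: float = 0.10) -> str:
    """Hypothetical pre-specified stopping rule applied at an interim analysis."""
    if prob_better >= efficacy:
        return "stop for efficacy"
    if prob_better <= futility:
        return "stop for futility"
    return "continue enrolling"


# Hypothetical interim data: 20/50 responders on treatment, 10/50 on control.
prob = prob_treatment_better(20, 50, 10, 50)
print(f"P(treatment better) ~ {prob:.3f} -> {interim_decision(prob)}")
```

Because the posterior simply absorbs new data, the same calculation can be repeated at each planned interim look.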

Analysis

The shift to Bayesian methods illustrates a significant transformation in hypothesis testing, providing researchers with dynamic tools for real-time decision-making, particularly valuable in fields where rapid evaluations are essential, such as healthcare.

5. Multivariate and Simultaneous Tests

As data grew more complex, the need for multivariate analysis and simultaneous testing emerged. MANOVA (Multivariate Analysis of Variance) tests several outcome variables jointly, and the Bonferroni correction adjusts the significance threshold when many hypotheses are tested at once. Both help researchers keep the overall false-positive rate from inflating as the number of comparisons grows.
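The Bonferroni correction is simple enough to sketch directly. The code below, with hypothetical function names and p-values, first shows why a correction is needed: with m independent tests at level alpha and every null true, the chance of at least one false positive is 1 - (1 - alpha)^m. It then applies Bonferroni’s rule of testing each p-value against alpha / m. Holm’s step-down procedure, which the text does not mention, is included as a swapped-in comparison; it controls the same family-wise error rate while rejecting at least as many hypotheses. MANOVA is not illustrated here.

```python
# Illustrative sketch; function names and p-values are hypothetical.
from typing import List, Sequence


def family_wise_error_rate(alpha: float, m: int) -> float:
    """P(at least one false positive) across m independent tests when every null is true."""
    return 1 - (1 - alpha) ** m


def bonferroni(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Reject H0_i when p_i <= alpha / m."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def holm(p_values: Sequence[float], alpha: float = 0.05) -> List[bool]:
    """Holm step-down: compare the k-th smallest p-value (k = 0, 1, ...) with alpha / (m - k)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if p_values[i] > alpha / (m - rank):
            break  # stop at the first non-rejection; all larger p-values are retained
        reject[i] = True
    return reject


print(f"FWER for 20 uncorrected tests: {family_wise_error_rate(0.05, 20):.3f}")
p_vals = [0.01, 0.02, 0.03, 0.005]  # hypothetical p-values from four outcomes
print("Bonferroni:", bonferroni(p_vals))
print("Holm:      ", holm(p_vals))
```

Twenty uncorrected tests carry about a 64% chance of at least one false positive. For the four example p-values, Bonferroni rejects only 0.01 and 0.005, while Holm rejects all four.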

Case Study: Social Sciences and Education

Multivariate testing methods have been particularly useful in the social sciences for assessing several educational interventions or outcomes at once, giving a more complete view of their effectiveness.

Analysis

This application shows how hypothesis testing has evolved to handle complex data, yielding better insights and supporting informed decisions across diverse fields.

Walking Through Current Challenges

6. Misinterpretations and Misuse

Despite these advances, p-values and statistical significance are still frequently misused and misinterpreted. The replication crisis, in which many published findings could not be reproduced by later studies, highlights the need for a deeper understanding of the nuances of hypothesis testing.
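One well-documented form of misuse is optional stopping: checking the p-value repeatedly as data arrive and stopping as soon as it drops below 0.05. The simulation below is a sketch under stated assumptions (normally distributed data with a known standard deviation of 1, a true null hypothesis, a two-sided z-test at each look, and illustrative batch sizes and seeds); the function name is hypothetical. It estimates how often such a procedure reports a false positive.

```python
# Illustrative sketch; the function name, batch size, and seed are hypothetical.
import random
from statistics import NormalDist


def false_positive_rate_with_peeking(looks: int, batch: int = 10, alpha: float = 0.05,
                                     trials: int = 2_000, seed: int = 42) -> float:
    """Share of null-true experiments that reach p < alpha at any of `looks` interim checks.

    Data are Normal(0, 1), so H0: mean = 0 is true and every rejection is a false positive.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    false_positives = 0
    for _ in range(trials):
        total, n = 0.0, 0
        for _ in range(looks):
            total += sum(rng.gauss(0.0, 1.0) for _ in range(batch))
            n += batch
            z = total / n ** 0.5  # mean / (sigma / sqrt(n)) with sigma = 1
            if abs(z) > z_crit:   # "significant": stop and report a finding
                false_positives += 1
                break
    return false_positives / trials


single_look = false_positive_rate_with_peeking(looks=1)
ten_looks = false_positive_rate_with_peeking(looks=10)
print(f"one look: {single_look:.3f}, ten looks: {ten_looks:.3f}")
```

With a single look the false-positive rate stays near the nominal 5%; with ten looks it climbs well above that, even though no real effect exists.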

Case Study: Psychological Research Failures

Several high-profile psychological studies have failed to replicate, raising questions about the validity of the initial findings. This exemplifies the crucial importance of robust experimental designs and appropriate statistical practices.

Analysis

This case study emphasizes the need for rigorous hypothesis testing methods and fosters dialogue on methodological improvements that can address common pitfalls in research.

Conclusion: Embracing the Future of Hypothesis Testing

Tracing the evolution of hypothesis testing from Fisher to modern methods highlights the remarkable progress of statistical methodology. From Fisher’s foundational concepts to modern Bayesian approaches and multivariate analyses, the landscape of hypothesis testing continues to evolve.

Researchers today should not merely rely on these techniques; they must also understand the underlying principles to make informed decisions. By remaining willing to adapt and innovate, we can harness hypothesis testing to address modern challenges and pave the way for future discoveries.

FAQs

1. What is hypothesis testing?

Hypothesis testing is a statistical method used to make inferences about population parameters based on sample data. It involves establishing a null hypothesis and an alternative hypothesis, followed by statistical analysis to determine whether to reject the null hypothesis.

2. Why is the p-value important?

The p-value is the probability of observing results at least as extreme as those actually observed, assuming the null hypothesis is true. A low p-value means the data would be unusual under the null hypothesis, which counts as evidence against it; it is not the probability that the null hypothesis is true, and it does not by itself confirm any particular alternative.
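As a concrete instance of this definition, the sketch below computes an exact one-sided p-value for a binomial experiment. The scenario and function name are hypothetical: the null hypothesis says a success probability is 0.5, we observe k successes in n trials, and "at least as extreme" is taken to mean k or more successes.

```python
# Illustrative sketch; the function name and scenario are hypothetical.
from math import comb


def binomial_p_value_upper(k: int, n: int, p0: float = 0.5) -> float:
    """One-sided p-value P(X >= k) when X ~ Binomial(n, p0), i.e. assuming H0 is true."""
    return sum(comb(n, i) * p0 ** i * (1 - p0) ** (n - i) for i in range(k, n + 1))


p = binomial_p_value_upper(9, 10)
print(f"P(X >= 9 | n = 10, p0 = 0.5) = {p:.4f}")  # (10 + 1) / 1024
```

Nine or more successes would occur only about 1% of the time if the null hypothesis were true, so the data count as evidence against it.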

3. What are frequentist and Bayesian approaches?

Frequentist approaches interpret probability as the long-run frequency of outcomes over repeated samples and do not formally incorporate prior knowledge, while Bayesian methods integrate prior data or beliefs with the observed data, resulting in a more flexible framework tailored to the context.

4. How do I interpret p-values correctly?

Interpreting p-values involves understanding their context. A p-value less than a predetermined alpha level (commonly 0.05) suggests statistical significance, but this doesn’t imply practical significance. Researchers must also consider effect sizes and the power of the study.
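The gap between statistical and practical significance is easy to demonstrate from summary statistics. The sketch below assumes two equal-sized groups with a common, known standard deviation, uses a two-sided z-test, and reports Cohen’s d alongside the p-value; the function name, means, standard deviation, and sample sizes are hypothetical.

```python
# Illustrative sketch; the function name and all numbers are hypothetical.
import math
from statistics import NormalDist
from typing import Tuple


def z_test_summary(mean1: float, mean2: float, sd: float, n_per_group: int) -> Tuple[float, float]:
    """Return (Cohen's d, two-sided p-value) for a two-sample z-test with a known common sd."""
    diff = mean2 - mean1
    d = diff / sd                               # standardized effect size
    std_err = sd * math.sqrt(2 / n_per_group)   # standard error of the difference in means
    z = diff / std_err
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return d, p


# Same tiny difference (0.3 points on a scale with sd 15), two very different sample sizes.
for n in (100, 50_000):
    d, p = z_test_summary(100.0, 100.3, 15.0, n)
    print(f"n = {n:>6} per group: d = {d:.3f}, p = {p:.4f}")
```

The effect size is identical in both cases, about 0.02 standard deviations, far below Cohen’s conventional "small" effect of 0.2. Yet with 50,000 participants per group the p-value falls well under 0.05.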

5. What are the implications of the replication crisis?

The replication crisis highlights concerns regarding the reliability of existing scientific findings. It emphasizes the need for rigorous experimental designs, transparent reporting, and understanding the limitations of hypothesis testing to improve research quality moving forward.

The evolution of hypothesis testing is an ongoing journey. By learning from its history and embracing new methodologies, researchers can contribute to a robust and credible body of knowledge that will continue to impact various fields of study.